TDP

Long live TDP!

We welcome with great pleasure and interest the release on 14 April 2022 of the Trunk Data Platform (https://trunkdataplatform.io/), which is "the [Hadoop] distribution co-constructed by EDF & DGFIP (Direction Générale des Finances Publiques), via the TOSIT association".

This is excellent news for several reasons:

We feel less alone! For almost 3 years now, since the acquisition of Hortonworks by Cloudera, LINAGORA has been developing an alternative stack called LDP (LINAGORA Data Platform) on behalf of several major customers, to ensure continuity of use and support for Hadoop technologies in mission-critical environments;

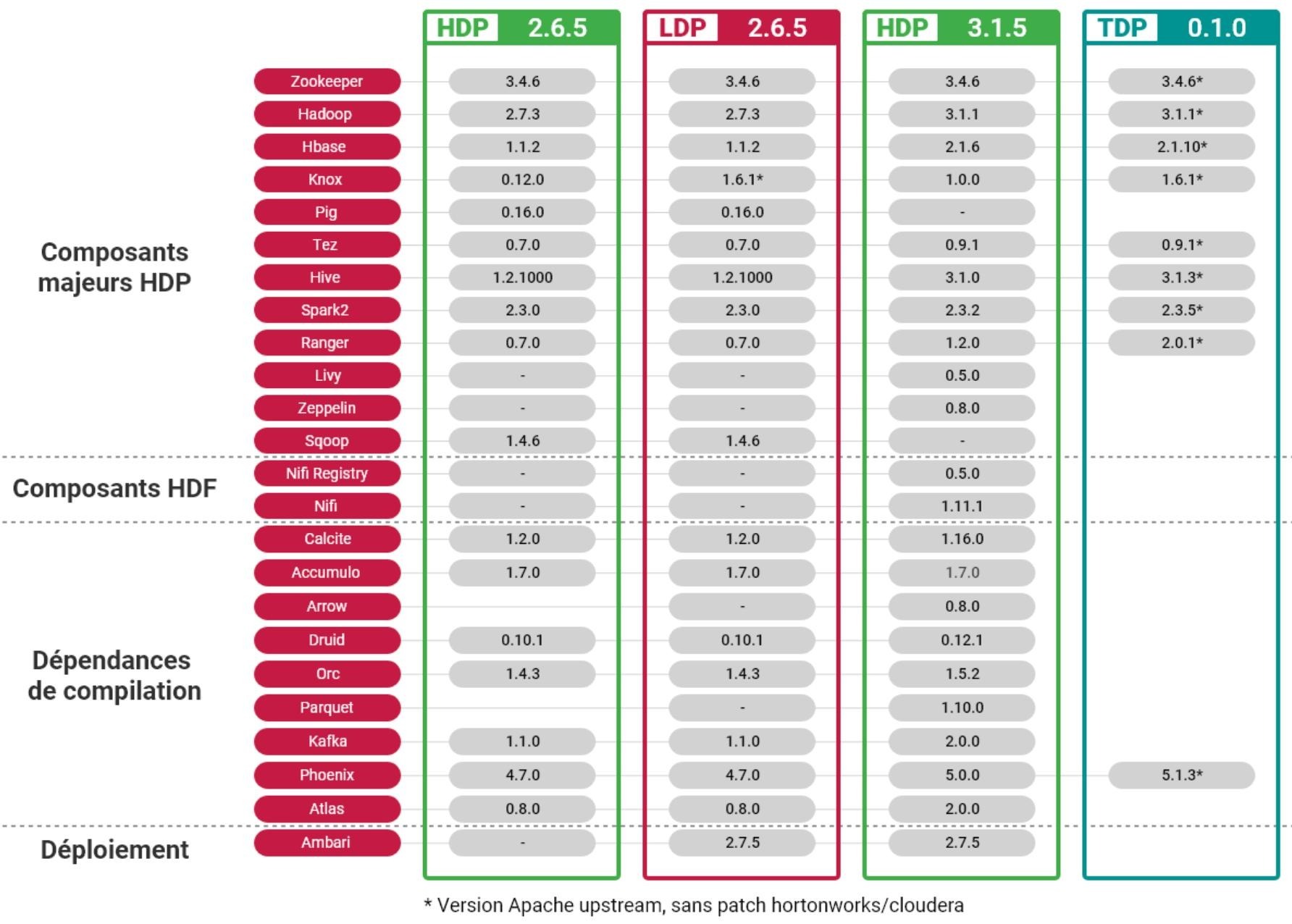

LINAGORA's work is based on the source code of the HDP and CDP stacks (still available to date) in versions 2.6.x and 3.1.x, and comes with software assurance through its OSSA offering (https://www.08000linux.com/). The deliverables supplied to our customers are RPM packages built primarily for alternatives to RHEL (CentOS or AlmaLinux);

We took part in the discussions at the origin of TDP with the DGFIP over a year ago. The shared objective is to enable the emergence of an alternative stack that pools and consolidates efforts to industrialise and maintain a sovereign open source Big Data stack that is not dependent on a single player. The first brick has been laid;

We see TDP as an opportunity to converge by rebasing our work on the Apache versions of the various components, while also keeping an eye on the work being done on other stacks: Apache Bigtop (https://bigtop.apache.org), BigData for OpenPOWER (https://github.com/OpenPOWER-BigData), etc.

We have taken the time to look at the information published by the TOSIT community (https://tosit.fr). Here are the main elements of our analysis:

TDP is based on the Apache versions of the components made available by the ;

To date, there have been no patches, modifications, functional contributions or security fixes to the component code. It's a case of "take it as it is" on the basis of the community versions. This means that all the market players interested in the subject start from the same level of expertise on TDP, since it is the Apache versions that are used;

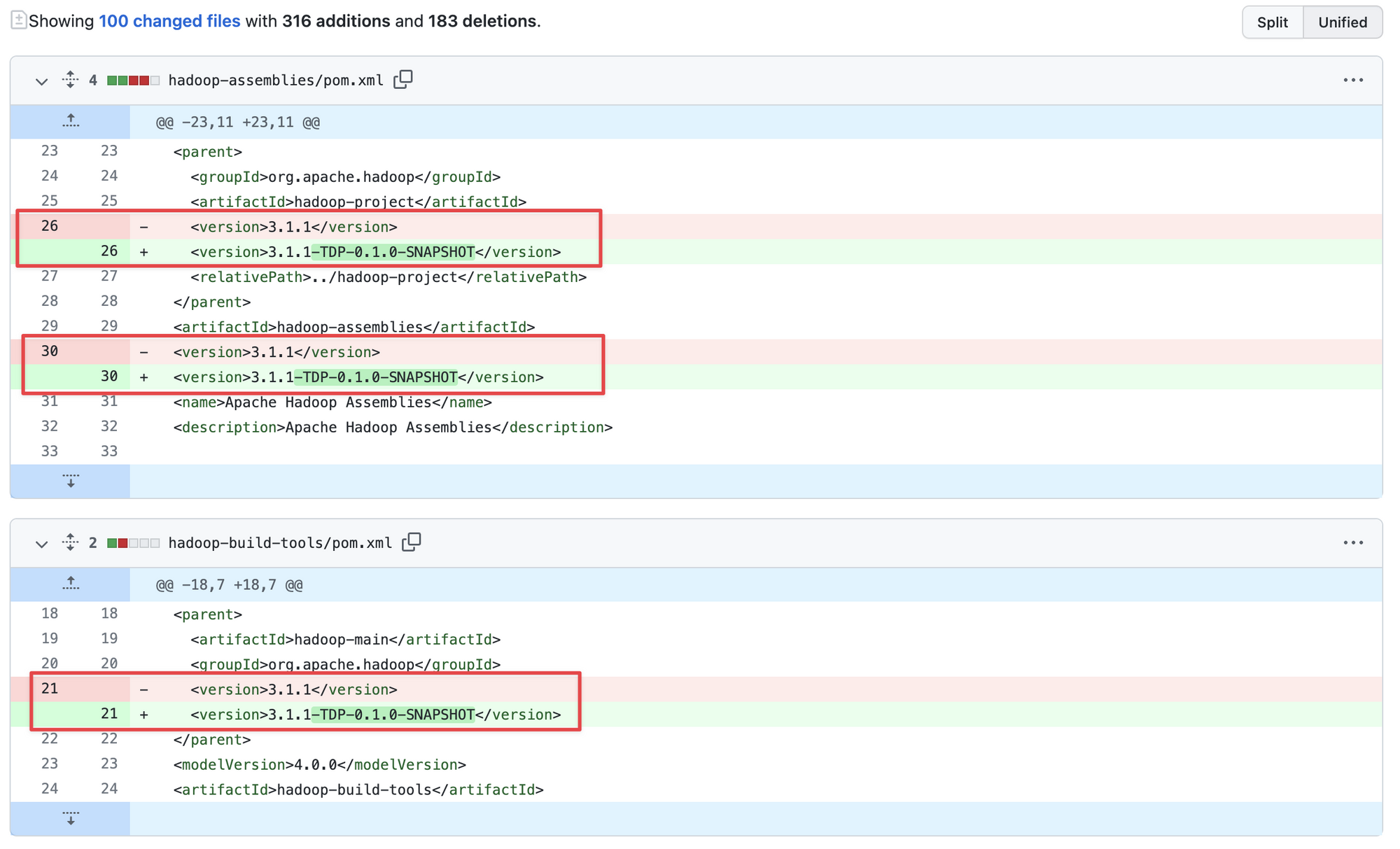

The vast majority of line additions/deletions in commits concern version renaming and the creation of Jenkins build jobs;

Work has been contributed on deployment mechanisms (without RPM packaging at present): the executable deliverables are supplied as tar.gz archives, to be deployed with Ansible playbooks;

The versions selected by TDP are consistent with what we produce for builds carried out for our customers;

From a legal point of view: the code is not modified and the licences are retained, so the Apache License 2.0 applies.

The points of difference, or those that caught our attention:

To date, only 11 components are distributed. Our current customers require additional components that are used in their specific applications;

We question the chosen form of packaging (tar.gz archives deployed with Ansible), which, in our opinion, is not optimal in a critical production context. We recognise the benefit of being agnostic of the Linux distribution used, but TDP seems to us to lack the dependency management at deployment time and the simplified update management offered by an industrial packaging system such as RPM.

The majority of contributors (9 to date on the main repositories) come from just one company. Remember that one of the aims of these alternative stacks is to break the Cloudera deadlock and no longer be dependent on a single company...
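To illustrate the packaging point raised above, here is a minimal Python sketch of the kind of pre-deployment check that a package manager performs from declared metadata, and that a plain tar.gz archive does not carry. It is only an illustration under our own assumptions: the function names, component names and version numbers are hypothetical, and this is neither how RPM nor how the TDP Ansible playbooks actually work.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, ...]


def parse_version(text: str) -> Version:
    """Turn a dotted version such as '3.4.6' into a comparable tuple (3, 4, 6)."""
    return tuple(int(part) for part in text.split("."))


def missing_dependencies(requires: Dict[str, str],
                         installed: Dict[str, str]) -> List[str]:
    """Return one message per unmet requirement (empty list if all are met).

    `requires` maps a dependency name to its minimum version (hypothetical
    metadata that a package would declare); `installed` maps a name to the
    version found on the target host.
    """
    problems = []
    for name, minimum in sorted(requires.items()):
        found = installed.get(name)
        if found is None:
            problems.append(f"{name}: not installed (need >= {minimum})")
        elif parse_version(found) < parse_version(minimum):
            problems.append(f"{name}: found {found}, need >= {minimum}")
    return problems
```

With hypothetical requirements `{"java": "1.8", "zookeeper": "3.4.6"}` and a host that only has `{"java": "1.8"}`, the function reports that `zookeeper` is not installed. With a tar.gz deployment, a check of this kind has to be written and maintained in the playbooks themselves.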

We have already approached the various players involved in TDP with a view to making our first contributions in the very near future. Through this work, we hope to demonstrate LINAGORA's ability to:

Take part in this initiative, which we support and believe in;

Support TDP through our OSSA offering: our customers expect us to provide MCO (Maintaining Operational Conditions) and MCS (Maintaining Security Conditions) services with strong service-level commitments. The only proof of this capability is having already made contributions to the project;

Validate the functioning of the pull requests, the reception of the community and the governance of the TDP project led by the TOSIT association;

And finally, confirm that the primary objective really is to avoid depending on a single company for code contributions to the various TDP components (core software and deployment technology).

Over the next few days, we plan to make at least two contributions:

The addition of a major component to TDP such as Sqoop or NiFi;

The contribution of a security patch to one of the core components.

In the longer term, the discussions initiated with the DGFIP and the other TDP players should, in our view, make it possible to address some major questions:

Provide a user-friendly tool for managing the deployment of Hadoop clusters in order to simplify their monitoring (observability) and day-to-day operation, replacing the Ambari brick;

Define the functional roadmap in terms of changes to core components, taking advantage of the efforts of the Apache community and others;

Envision and prepare for the "post-Hadoop era" with the rise of data factory principles (in addition to data warehouses) and the advent of data streaming technologies such as Kafka (https://kafka.apache.org) or Pulsar (https://pulsar.apache.org).

In any case, we're delighted once again that TDP has been published, and we'll be contributing code to the project over the next few days.

Long live TDP... and don't get your feet stuck in the elephant's trunk ;-)