In a context where generative AI is being rapidly deployed in various fields, it poses major challenges for digital security. The ability of this technology to produce realistic content opens up new prospects, but also potential vulnerabilities. AI adds new challenges, both positive and negative.

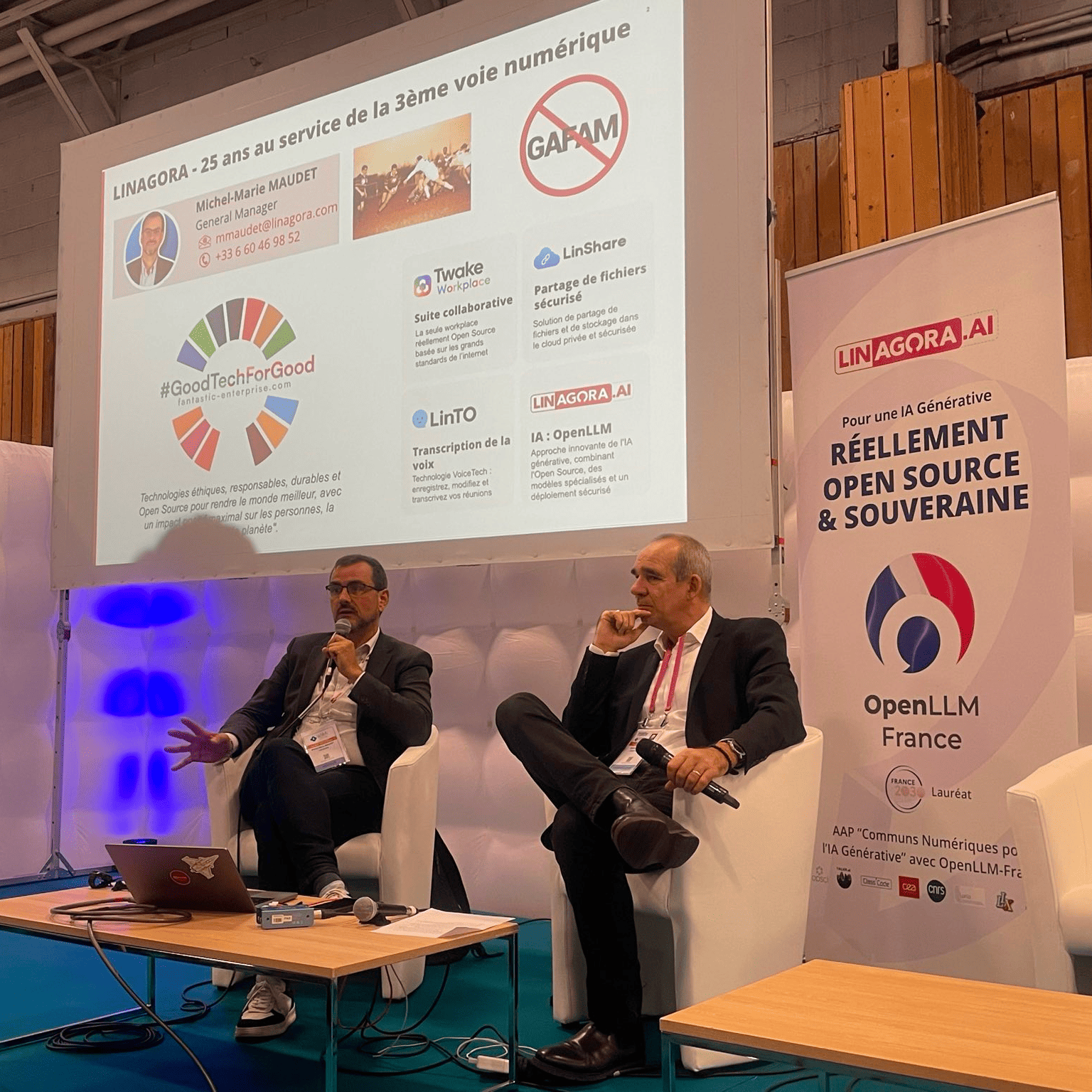

Invited by Fabrice Frossard, Michel-Marie Maudet, Managing Director and Co-founder of LINAGORA, spoke at the opening plenary session about the impact of its generative AIs on cybersecurity at the latest edition of the Cloud + Security Forum.

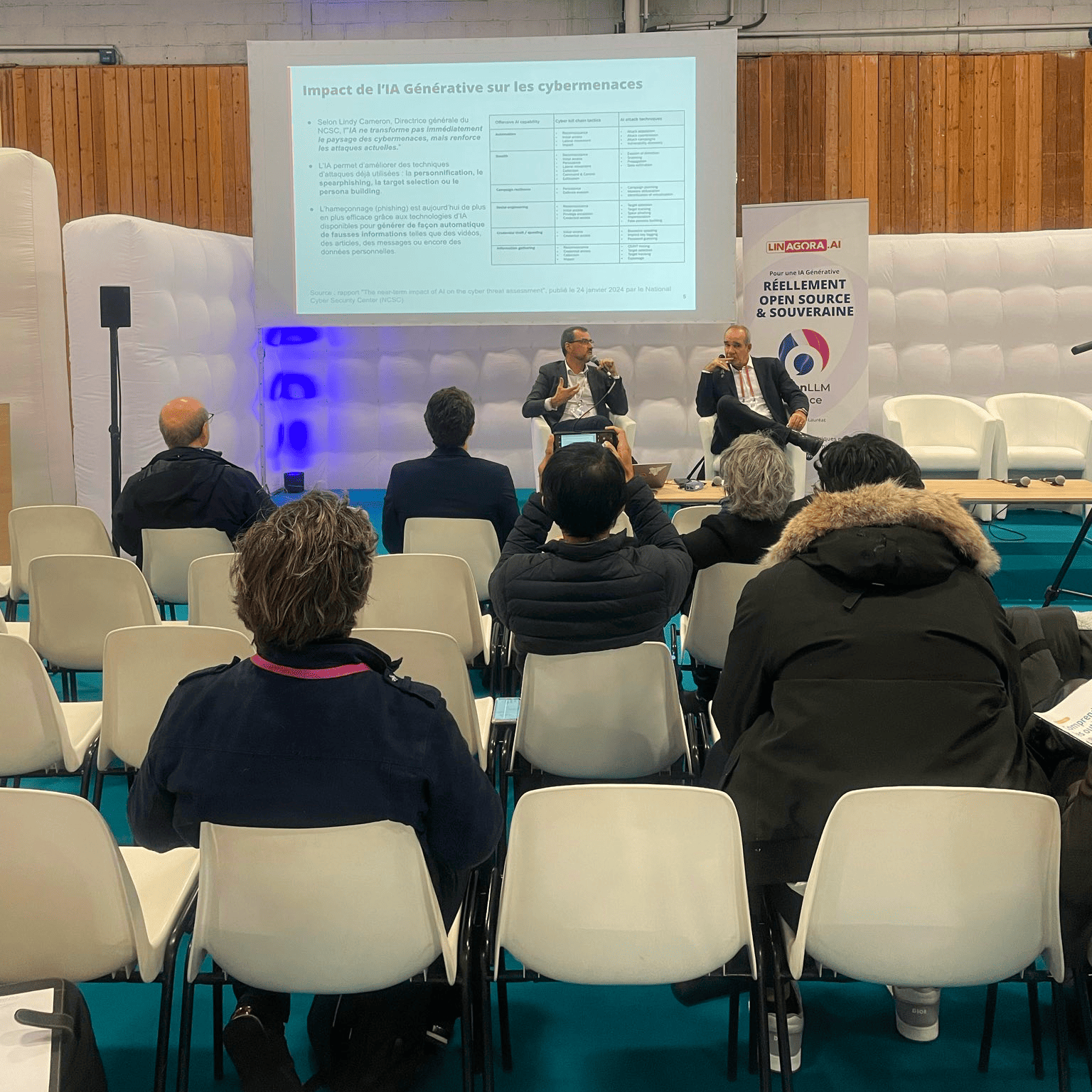

The changing cyberthreat landscape in the age of generative AI

The advent of generative artificial intelligence has significantly altered the cyberthreat landscape, lowering the barriers to entry for cybercriminals and significantly increasing the frequency and intensity of attacks. The availability of services such as Ransomware-as-a-Service (RaaS) allows a growing number of actors to launch sophisticated attacks without having advanced technical skills.

As Michel-Marie MAUDET of LINAGORA explains:

"For just a few euros, since we're talking really small sums, you can have completely automated attack mechanisms.".

For around $40 a month, these services offer programmable bots to target victims, with flexible payment models (subscriptions or gainsharing). For example, if an attack yields $100,000, 70% goes to the user and 30% to the criminal provider, rather like a legitimate platform. These offers make it possible to launch attacks without technical expertise and at very low cost.

According to the ANSSI 's 2024 report, incidents are increasing by 30%, with around 1,000 incidents reported. AI facilitates the speed and scale of attacks, making ransomware and phishing attacks more accessible and quicker to deploy.

The democratisation of tools such as voice morphing and deep fakes poses new challenges for cybersecurity professionals, who have to deal with an increase in the volume and sophistication of threats.

Michel-Marie Maudet recommends two main resources for finding out about and countering these attacks: firstly, the ANSSI guides and secondlyOWSAP (a foundation dedicated to security).

The ANSSI guide outlines the lifecycle of AI in the enterprise, from development to operation, and includes 35 detailed recommendations. Three sections are identified:training (to be avoided without preparation, according to the speaker), initial deployment and day-to-dayoperation. The ANSSI highlights a number of common-sense rules, including integrating security from the start of the lifecycle and avoiding ‘shadow AI’ (uncontrolled AI tools). Securing the supply chain is also essential, with code libraries thoroughly checked before they are used in production. One notable recommendation also concernssecure hosting (SecNumCloud), which imposes costly conditions on government agencies, particularly in terms of secure GPUs, which are in short supply in France. The ISO 42001 standard on the management of AI in information systems should also be noted.

Also, the OWASP Foundation , known for its Top 10 vulnerabilities in web applications, has launched a similar ranking for generative AI and language models (LLM) in 2023. Although technical, this Top 10 identifies the main risks in AI, and here are the two main ones.

- Prompt injection: this involves including insecure commands in a language model to alter its behaviour. For example, a user could inject hidden instructions into a prompt to obtain sensitive information. This is dangerous because poorly protected models do not filter data correctly.

- Leakage of sensitive information: without adequate protection, a model could regurgitate private information when queried. This is a particular problem for companies using RAG (Retrieval-Augmented Generation), where the AI model accesses the company's databases to enrich its answers. For example, if a business model can consult a knowledge base to answer ‘Who founded LINAGORA?’, it will search internal documents to provide an accurate answer. This reduces hallucinations andenables information to be sourced, but the model remains vulnerable to data extraction attacks.

Finally, to protect yourself, it is important to configure security at model level (filtering, access control) and at data level to limit unauthorised access to sensitive information.

The security challenges associated with implementing generative AI in businesses

The integration of generative AI into corporate information systems raises new security challenges.

One of the major issues is the protection of sensitive data whenusing language models (LLM) and techniques such as RAG (Retrieval Augmented Generation).

Michel-Marie MAUDET warns:

"A model contains knowledge... And if you ask it a question, it makes this knowledge available to anyone.

So this means that the connected application that is going to use the LLM absolutely has to do this filtering and security management work.".

To deal with this kind of problem, Michel-Marie recommends the use of tools to detect and ‘anonymise’ personal data in model prompts and responses. These solutions can be found in Open Source AI models:

"Now, with OpenLLM, you can create your own alternatives! ",

Michel-Marie will also give a concrete example of an AI architecture using LLM and RAG. The demonstration highlights ‘how these vulnerabilities can be exploited’. The idea is to apply certain recommendations to a typical AI application architecture, incorporating LLM combined with RAG. A solution similar to the one deployed for LINAGORA customers. This raises awareness of specific risks, such asdata poisoning orinformation extraction via ‘prompt injection’ type attacks, which are particularly easy to execute.

The opportunities offered by AI in the field of cybersecurity

Despite the increased risks, generative AI also offers new opportunities to strengthen cybersecurity. Security vendors and specialist companies are increasingly integrating AI into their tools to improve threat detection and response.

Michel-Marie MAUDET identifies four main trends in this area:

- SOC automation: AI agents help operators monitor user behaviour and logs, which are essential for complex information systems.

- Action automation and remediation: AIs can now automate tasks such as data collection or responding to security alerts, for example by closing vulnerable ports if abnormal behaviour is detected on the network.

- Enhanced defence in depth: AI monitors internal flows and network security zones to identify potentially malicious behaviour, beyond traditional protections such as firewalls.

- Sharing knowledge via OSINT: Sharing information on attacks and vulnerabilities, enhanced by AI, increases the resilience of the entire sector through the aggregation and analysis of accessible security information.

These advances enable faster, more accurate analysis of threats, as well as a more effective response to security incidents.

To stay informed and connected to the challenges of Artificial Intelligence in Cybersecurity, follow the ANSSI's MOOC and adopt good digital practices, which are essential for securing our systems, whether or not they include generative AI.