This is the question explored by Journal du Net in an article by Benjamin Polge, to which our chief executive, Michel-Marie Maudet, contributed.

A very concrete subject, yet rarely treated with such precision: how to correctly size a local inference infrastructure for a large language model (LLM) without over‑investing or throttling performance?

In the article, Michel-Marie Maudet points out that a model's hardware requirements do not depend solely on its size.

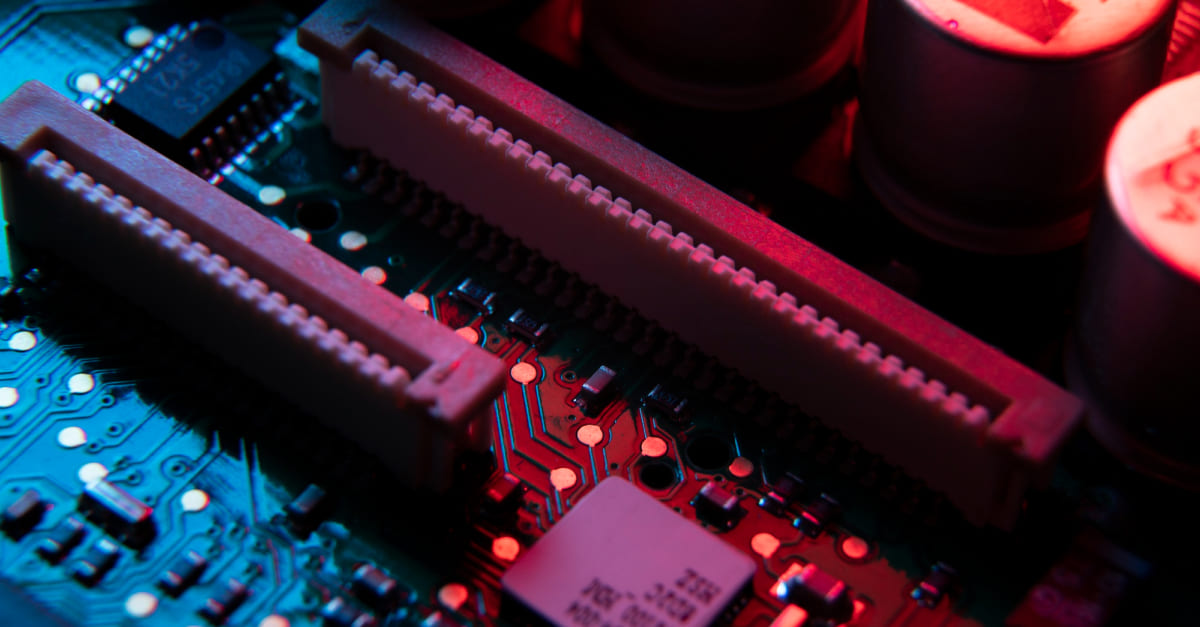

VRAM remains the key constraint: it determines both the largest model that can be loaded and the usable length of the context window.

Next comes quantization, a compression technique that can cut memory consumption by up to 4× (for example, by storing weights in 4 bits instead of 16), often with a barely perceptible loss in quality.

Finally, the context window plays a key role: the wider it is, the more text the model can process at once, from a simple conversation to the analysis of a full document.
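To give a feel for why a wider context window costs memory, here is a minimal sketch of the usual key/value cache estimate for a transformer. The function name `kv_cache_gb` and the architecture values (32 layers, 8 key/value heads, head dimension 128, 16-bit cache) are illustrative assumptions, not figures from the article or from any specific model.

```python
def kv_cache_gb(context_tokens: int, n_layers: int, n_kv_heads: int,
                head_dim: int, bytes_per_value: int = 2,
                batch_size: int = 1) -> float:
    """Approximate key/value cache size in GB (10^9 bytes).

    Each layer stores one key tensor and one value tensor per token,
    hence the leading factor of 2.
    """
    total_bytes = (2 * n_layers * n_kv_heads * head_dim
                   * bytes_per_value * context_tokens * batch_size)
    return total_bytes / 1e9


# Hypothetical architecture, chosen only for illustration.
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128

for ctx in (4_096, 32_768, 131_072):
    size = kv_cache_gb(ctx, LAYERS, KV_HEADS, HEAD_DIM)
    print(f"{ctx:>7} tokens of context: ~{size:.2f} GB of KV cache")
```

The estimate grows linearly with the number of tokens, and it comes on top of the memory taken by the model weights themselves.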

To illustrate these principles, the article cites several reference points drawn from our work at LINAGORA:

- A model with 24 billion parameters requires roughly 48 GB of VRAM to run comfortably.

- For lighter use cases, a 7-billion-parameter model runs comfortably on an RTX 4060 or 4070.

- And beyond 30 billion parameters, we enter the realm of GPU clusters, with several H100 or A100 cards working in concert.
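These reference points can be approximated with simple arithmetic: the memory needed for the weights is roughly the number of parameters multiplied by the bytes stored per parameter. The sketch below assumes 16-bit weights by default, which the article does not state explicitly, and the helper name `weight_memory_gb` is ours. It ignores the KV cache and runtime overhead, so real requirements will be somewhat higher.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int = 16) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # (params_billions * 1e9 parameters) * bytes_per_weight / 1e9 bytes per GB
    return params_billions * bytes_per_weight


# Model sizes taken from the reference points above.
for size in (7, 24, 30):
    full = weight_memory_gb(size, 16)
    quantized = weight_memory_gb(size, 4)
    print(f"{size}B parameters: ~{full:.0f} GB at 16-bit, "
          f"~{quantized:.1f} GB at 4-bit")
```

Under these assumptions, a 24-billion-parameter model at 16 bits comes out at about 48 GB, in line with the first reference point, and moving to 4-bit weights divides that figure by four.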

These figures underscore a simple fact: the choice of infrastructure has a direct bearing on digital sovereignty.

That is precisely what Michel‑Marie Maudet reaffirmed during the launch of Cap Digital’s IA—ction programme, when he was asked:

“How can we guarantee our autonomy on inference, and which infrastructure should we bet on?”

At LINAGORA, we work every day to answer this question concretely, by building end-to-end expertise in sizing open, sovereign inference platforms at scale. These platforms are designed to run open-weight models, or better still open-source ones such as our European LLM LUCIE, efficiently on local GPUs or in a hybrid cluster.

The full article can be read for free on Journal du Net:

“LLM en local : comment choisir la bonne configuration matérielle ?”